After the last post I had a few realizations when I compiled raylib and tested the same examples on the aging MacBook Pro that I’ve revived. The module music example crashes the same way, so the RPiZ/3 were innocent! And the colored cubes example is very expensive to run on the Intel Iris 5100 too, which is no slouch. So I had to redo these two tests to make sure my evaluation was correct.

First, running a different cubes drawing demo on RPiZ resulted in a solid 60 fps:

Also, I realized why the textures_bunnymark demo didn’t show any bunnies on the Raspberries. I had modified it to emit bunnies with keyboard input, since there is no mouse cursor when running native GL like that. But all the bunnies were still being spawned at GetMousePosition(), which always returns the screen origin in this case, so the bunnies stayed hidden under the top rectangle!
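Here’s a rough sketch of that spawn-position fallback, written in Python rather than raylib’s C to keep it short. The function name and the "no pointer" convention (passing None) are made up for illustration, and 800x450 is just the window size the examples were running at:

```python
from typing import Optional, Tuple

Point = Tuple[float, float]


def spawn_position(mouse: Optional[Point], screen_w: int, screen_h: int) -> Point:
    """Pick where a new bunny should appear.

    `mouse` is None when there is no usable pointer (hypothetical convention);
    in that case fall back to the center of the screen instead of the origin.
    """
    if mouse is None:
        return (screen_w / 2, screen_h / 2)
    return mouse


# No pointer available: bunnies appear mid-screen, clear of the top rectangle.
print(spawn_position(None, 800, 450))
# Pointer available: behave like the unmodified demo.
print(spawn_position((120.0, 300.0), 800, 450))
```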

So now the bunnies are spawned in the center and it works perfectly:

It can render up to 2000 alpha-blended bunnies to the framebuffer while maintaining a solid 60 fps! That’s not bad at all for 2D games. Once we get to 2100 bunnies the performance drops sharply from 60 to around 52 fps. This kind of predictable performance is only possible in native mode, so I consider this experiment a success. 🙌

I’ve always found the RPiZ interesting because it’s so limited and slow (lol), which is kind of why I like the Amiga A500 or Atari ST.

My vision for the RPiZ was to use it as a low-level game development platform with a respectable GPU compared to older, pre-DirectX 9 GPUs. The problem is that modern-day Linux (Raspbian included) is way too much for this little chip, which makes it almost impossible to use for GUI-based development. On top of that, the super-outdated and bloated X11 taxes the hardware so much that any OpenGL performance is completely unpredictable and choppy.

The RPiZ comes with an ARM11 processor that uses the ARMv6 architecture (reported as armv6l). It has a floating point co-processor (called VFP) and some not-so-useful extensions like running Java bytecode in hardware. The RPiZ runs the processor at 1000MHz, which sounds like a lot, but it pales compared to a Pentium III 600MHz for example. I’ve heard it compared to a Pentium II 300MHz with a small cache and terribly limited IO bandwidth.

Its GPU is the VideoCore IV, which on paper supports OpenGL ES 2.0 and uses a tiled renderer. It’s quite cool that this GPU is entirely detailed in this freely available document. On paper it has a compute capacity of around 24 GFLOPs. The GameCube is said to have 9.4 GFLOPs in comparison. There is no way the VC4 can produce the same rendering level as the GameCube, though, as it doesn’t have dedicated memory or enough bandwidth to do anything like that.
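For a feel of where a number like 24 GFLOPs comes from, here’s a back-of-envelope sketch. The inputs are my assumptions based on the commonly quoted VideoCore IV configuration (12 QPUs, 4 physical lanes each, one add plus one multiply per lane per cycle, 250 MHz clock); they are not taken from this post, so treat the result as a sanity check only:

```python
# Assumed VideoCore IV configuration (commonly quoted, not from this post).
QPUS = 12            # shader cores
LANES_PER_QPU = 4    # physical SIMD lanes per core
OPS_PER_LANE = 2     # one add + one multiply issued per cycle
CLOCK_HZ = 250e6     # GPU clock


def peak_gflops(qpus: int, lanes: int, ops: int, clock_hz: float) -> float:
    """Theoretical peak: every lane busy with both ops on every cycle."""
    return qpus * lanes * ops * clock_hz / 1e9


vc4 = peak_gflops(QPUS, LANES_PER_QPU, OPS_PER_LANE, CLOCK_HZ)
GAMECUBE_GFLOPS = 9.4  # figure quoted in the text

print(f"VC4 peak: {vc4:.1f} GFLOPs")
print(f"Ratio vs GameCube: {vc4 / GAMECUBE_GFLOPS:.2f}x")
```

A peak figure like this says nothing about memory bandwidth, which is exactly why the paper comparison with the GameCube is misleading.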

How can we get the true GPU performance with minimal intrusion from the operating system?

- we could boot bare metal and use the Circle library to access the hardware, including the vc4 GPU with OpenGL

- we could run natively from command-line by starting our own framebuffer and using that

I like the first solution as it sounds like a lot of fun and is the fastest, but it has a high up-front cost and iterating during development is going to be a challenge.

I opted for the second solution as it appeared to be the easiest. I know that SDL can do a native build with graphics support, but I was able to find a great library similar to one I was developing 2 years ago, except it’s actually finished lol: raylib.

Build raylib with native graphics

I used a fresh DietPi image on the RPiZ. To build raylib with native graphics I did:

mkdir build && cd build

cmake .. -DPLATFORM="Raspberry Pi" -DBUILD_EXAMPLES=OFF

make PLATFORM=PLATFORM_RPI

sudo make install

To build the examples there are a few things that need to be done:

- add the atomic library to examples/CMakeLists.txt, in the build loop at the end of target_link_libraries, so it should look like this:

target_link_libraries(${example_name} raylib atomic)

- exclude two examples from building due to a conflict in GL by adding these before if (${PLATFORM} MATCHES "Android"):

list(REMOVE_ITEM example_sources ${CMAKE_CURRENT_SOURCE_DIR}/others/rlgl_standalone.c)

list(REMOVE_ITEM example_sources ${CMAKE_CURRENT_SOURCE_DIR}/others/raylib_opengl_interop.c)

Then rebuild raylib with examples: -DBUILD_EXAMPLES=ON

Keep in mind that if you’re doing this on a Raspberry Pi 3, you don’t need any of these changes to build correctly, and you should build the “DRM” mode rather than the legacy “Raspberry Pi” mode.

So on RPi3 this is how to build:

cmake .. -DPLATFORM=DRM

make PLATFORM=PLATFORM_DRM

You’ll need a few extra dev libraries to build correctly: libgbm-dev and libdrm-dev

Results

Not great, mostly due to the driver not being great. OpenGL seems to be missing VAO support and blending is a bit glitchy (running textures_bunnymark shows no bunnies!). The R32G32B32 texture format, which the skybox example needs, is not supported either. The PiZ also hard freezes at random after a while, but this could be just my RPiZ as I’ve always had this issue.

Another odd issue is that running audio_module_playing segfaults.

Other than that, when it works it feels great! Instant startup and smooth.

Here’s the spotlight example showing how expensive this is on the GPU even though it was only running at 800x450:

Here’s the maze example working correctly:

And here’s the cubes example to show how terrible the performance can get. This demo in particular could be hitting a feature that the driver reports as available but that actually runs in software, though I don’t know that for certain:

Yeah, you could probably make simple graphical demos but this is not what I’d expect from a 24GFLOPs GPU and I blame the vc4 driver.

I tried the same thing on the RPi3. Compilation runs much faster naturally, but the issues, graphical and beyond, are all there too since it uses the same vc4 driver, including the missing bunnies in textures_bunnymark :(

RPi4 might be the one? haven’t tried it yet.

I’ve been using Fedora Kinoite on my main laptop for about a year now. Kinoite is a spin of Fedora Silverblue. This is the first and only immutable operating system I’ve used to date.

To explain why immutability is so important in my view, let’s take a look at some examples from other systems.

Mutability by bad decisions

Let’s start with Windows, in my opinion the worst widespread operating system of them all. It’s basically a collection of bad decisions upon bad decisions dating back to the 90s, dictated by the backward-compatibility requirements of legacy features that enterprises wish to drag along with them forever.

An example of one of those outdated decisions is that Windows was always designed to be a mutable OS.

What does that mean? You can go into your Windows system folder and delete anything you want. Windows may not stop you. In fact you need to do this every now and then to clear tens of GBs of Windows logs and/or temporary install files out of your system folder, which their updater (another broken legacy system) just ignores.

Another example of one of those bad decisions is the registry. You can just mess with it to your heart’s content, delete whatever you want, and it’ll mess up the whole system!

Mutability by Design

Linux as an operating system was designed from the ground up to be mutable, but it’s done right in my opinion. The user has complete freedom to do anything they want in the system, allowing advanced users to do whatever they can think of.

You want to experiment with a different OS scheduler? A different way to install device drivers? Have at it at your own peril.

The drawback of course is that this absolute mutability is hostile to its users. I can’t count how many times I killed my installed distro by attempting to update an NVIDIA display driver.

BSD is an interesting variation on the mutability by design philosophy. It actually achieves better stability and is more friendly to its users simply by being a larger OS that comes with many more batteries included and a slower more sensible evolution process.

The Walled Garden

On the other extreme, all modern mainstream phones are powered by immutable systems that require a jailbreak to escape from. An immutable system cannot be changed in any way by its user, only by the system or software makers themselves issuing updates to it.

An operating system with a walled garden design proved to be vital for stability and long term dependable robust operation. This is part of the reason why we depend on our mobile devices so much. We know they are very unlikely to misbehave or break internally like a Windows laptop often does.

This is also reflected in all Apple OSX devices like MacBooks. They are super dependable and clean.

However these systems come with a large drawback: the user does not really control the system, and the system controls what the user can and cannot do.

Immutable Operating Systems

Between the fragility of mutable systems and the authoritarianism of walled gardens lies a sensible middle.

That middle is a system that offers the same immutability you find in OSX/iOS/Android, so it’s dependable, robust, and maybe impossible to break by the end user. Yet it maintains the ability to let the user change most things in their system if they really want to, while also providing a method to reverse these changes non-destructively!

So what are the elements that Fedora Silverblue provides that let it achieve all that?

- Immutable system: neither you nor any software can change any system specific files/folders directly as they are protected from writing

- Atomic image updates: the system gets updated as an image rather than through the usual package manager approach. This eliminates any issues that may arise from wrong dependencies, and it provides a straightforward way to jump back to a previous image of the system, or forward to a future one still in beta, to test things out non-destructively

- The system image operates as a version control system via rpm-ostree, which acts as a package manager of sorts. It allows you to push changes on top of the current system image, so you can apply any system changes you need (such as installing NVIDIA drivers or any tools you may need in the OS) and these changes “shadow” any existing data. At any time you can revert or cherry-pick these changes, or discard all commits altogether and restore the original system image. When the system image is updated, your committed changes get pushed on top of the new image.

- You may install any software you want from flathub (flatpak is supported by default) and you can also install snapd by committing the change on top of the system image using rpm-ostree to enable snapcraft. And finally my favorite method: AppImage

- For developers, one of my favorite features in Kinoite/Silverblue is toolbox. You can create as many toolboxes as you want, and each acts as a self-contained mutable install of Fedora/CentOS where you can use dnf to your heart’s content and even install and run GUI applications like VS Code seamlessly. I naturally set up a toolbox per project and can go crazy in any of these toolboxes without worrying about my actual system getting poisoned in the process
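To make the "image plus layered changes" idea from the list above a bit more concrete, here’s a toy model in Python. It is purely conceptual: the class names, version strings, and package names are all made up, and this is not how rpm-ostree is actually implemented. It only mimics the behavior described: layering on top of a read-only base, re-applying layers after an image update, and undoing things non-destructively.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Deployment:
    """One bootable state: a read-only base image plus user-layered packages."""
    base_version: str
    base_packages: FrozenSet[str]
    layered: Tuple[str, ...] = ()

    def packages(self) -> FrozenSet[str]:
        return self.base_packages | frozenset(self.layered)


class ToySystem:
    def __init__(self, version: str, packages: set) -> None:
        # Every change appends a new deployment; old ones are never modified.
        self.history: List[Deployment] = [Deployment(version, frozenset(packages))]

    @property
    def current(self) -> Deployment:
        return self.history[-1]

    def layer(self, package: str) -> None:
        """Push a change on top of the current image."""
        cur = self.current
        self.history.append(
            Deployment(cur.base_version, cur.base_packages, cur.layered + (package,)))

    def upgrade(self, version: str, packages: set) -> None:
        """Swap the base image; layered changes are re-applied on top."""
        self.history.append(
            Deployment(version, frozenset(packages), self.current.layered))

    def rollback(self) -> None:
        """Jump back to the previous deployment."""
        if len(self.history) > 1:
            self.history.pop()

    def reset(self) -> None:
        """Discard all layered changes and return to the pristine base image."""
        cur = self.current
        self.history.append(Deployment(cur.base_version, cur.base_packages))


laptop = ToySystem("image-A", {"kernel", "desktop"})
laptop.layer("gpu-driver")
laptop.upgrade("image-B", {"kernel", "desktop", "new-tool"})
print(sorted(laptop.current.packages()))  # layered package survives the update
laptop.rollback()
print(laptop.current.base_version)        # back on the previous image
```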

In my opinion this is the perfect developer OS: as solid as OSX or iOS without sacrificing flexibility to change the system, best dev containers system I’ve used so far (much better than manually creating dev containers), and it still works perfectly for gaming by installing steam using rpm-ostree and steam runs in its own sandbox so that’s all you need.

However it’s anything but perfect. Here are some of the challenges I’ve run into so far:

- sometimes when an OS image update is available, some changes in it conflict with packages you installed using rpm-ostree and the update fails as a result. The error and info you get are vague, but the easiest fix is to simply uninstall whichever packages are conflicting with the update

- I could not for the life of me get an NVIDIA RTX GPU to work via Thunderbolt, I followed every single guide I found and eventually gave up and installed Windows on an external drive for playing newer games

- Running GUI applications from within toolbox works, however there is a really annoying bug that occurs when you quickly move between app UI elements with the mouse: the whole system pauses for a second (mouse included) and resumes after. This seems to be much worse in Electron apps, sigh

- Updating your software works from Fedora’s software manager (Discover) but sometimes some updates just fail for no apparent reason, retrying gets those updates to succeed most of the time

- Can’t change the wallpaper in the Login Screen as it’s in a system image path (might be a Kinoite specific issue?)

- Installing Lutris and other game launchers (ubi, epic, etc) is problematic as wine is not part of the default system image and must be applied using rpm-ostree

And that’s that! Since Silverblue there have been other immutable Linux distros; this awesome list keeps track of everything immutable.

GoVolDot is out! Released it a few days ago after not doing anything with it for a few weeks.

I have this weird release hesitation sometimes, I think I’m worried to let a project go, let it fly out of the window into the wild harsh world.

GoVolDot was a very interesting experience despite its apparent simplicity. The goal I had for it besides that I always wanted to remake this game and actually play it, is to run a project through an entire production cycle in preparation for bigger things in the future.

What Went Wrong 🤧

Estimation Fail

My estimate for this project from beginning to release was about 2 weeks. My actual total dev time was around 4 weeks of full time work.

While this is accurate for gamedev (2x the estimated time), it still feels a little too much for a minimalist 2D shooter!

Some excuses:

- I was honestly surprised how many enemies with unique behaviors there were, around 25 of them. The most complex enemies took me full days of work.

- Levels had very specific sequences for the game and the boss battles and I tried to reproduce them accurately. There are a LOT of events, so it took most of a day to play each level in the original game and then clone all its events into my spread-editor.

- I was also using Godot for a full-scale game for the first time, so had a lot to learn there and attempted many different ways to do things

Messy assets

The project started quite organically, so I threw together a large texture in Affinity Designer and used its sliced exports to spit out spritesheets per object. However, as the game scaled up to include more and more stuff, that simple process turned into a bit of a time-hole and produced many small sprite files, some only a few pixels wide. I should have developed a better pipeline for that stuff.


Unfruitful pursuits

I spent a lot of time playing around with different ideas and directions. Attempted to re-mix Volguard’s music theme twice then gave up due to not really being any good at music lol.

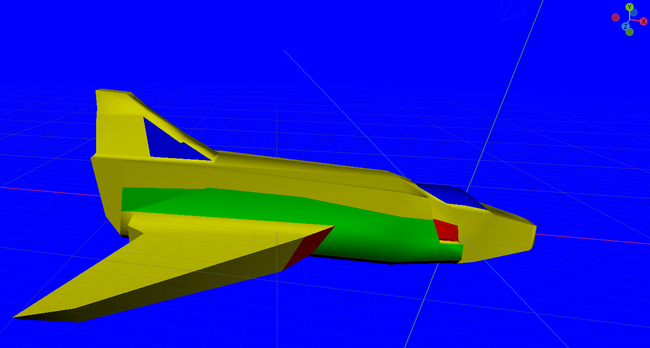

Here I had this crazy idea to switch to 3D for intro, syncretize, and reinforcement sequences:

And here is a taste of that awful remix I mentioned, you’ve been warned:

What Went Right! 🕺

Nailed the feels!

I got the feeling just right! The game is not 100% one-to-one with the original Volguard but it’s close enough. I’d say around 75% accurate.

There are a few bugs here and there but nothing major I’m aware of. All in all, it’s a playable game and one that I can replay myself and still be challenged and have some fun 🙂

Also I really like the font:

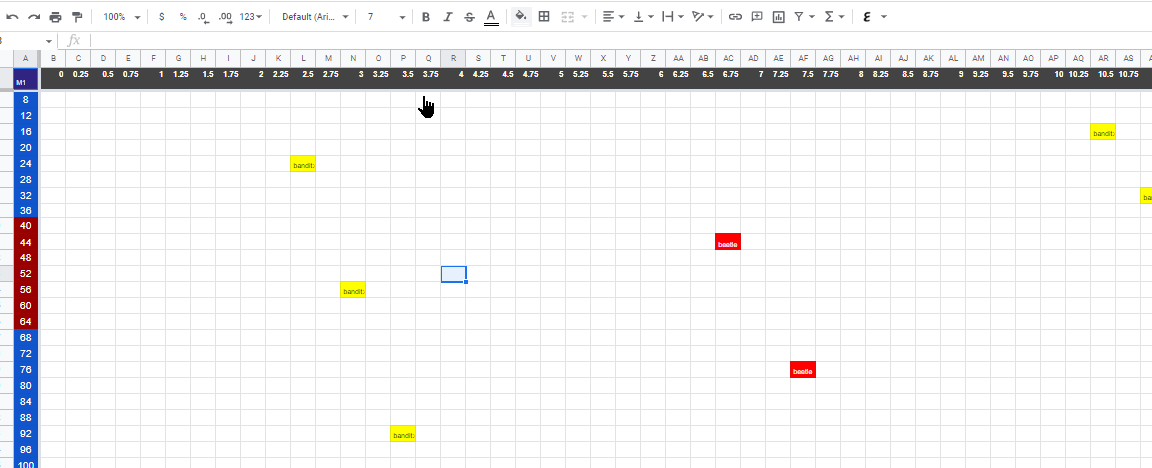

Spread-editor?

For GoVolDot I needed a way to describe precise sequences of events across a long timeline. I could not at the time come up with a way to build that into Godot quickly, but for whatever reason I thought a Google spreadsheet might be ideal for this and it’s immediately available!

Horizontal-axis is time at 0.25 secs steps. Vertical represents Y coordinate to spawn the enemy.

I could embed metadata about each spawned enemy behavior/look in its cell using this smooth-brain format: bandit:edir=-45:vel=[-80 0]
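A cell in that format is easy to take apart. Here’s a minimal parsing sketch in Python; the function names are hypothetical, and it treats keys like edir and vel as opaque, only assuming that the first token is the enemy type, the rest are key=value pairs, and bracketed values are space-separated number lists:

```python
from typing import Dict, List, Tuple, Union

Value = Union[float, List[float], str]


def parse_value(text: str) -> Value:
    """Turn '[-80 0]' into a list of floats, '-45' into a float, else keep text."""
    text = text.strip()
    if text.startswith("[") and text.endswith("]"):
        return [float(part) for part in text[1:-1].split()]
    try:
        return float(text)
    except ValueError:
        return text


def parse_cell(cell: str) -> Tuple[str, Dict[str, Value]]:
    """Split 'type:key=value:key=value' into (type, params)."""
    kind, *pairs = cell.strip().split(":")
    params: Dict[str, Value] = {}
    for pair in pairs:
        key, _, raw = pair.partition("=")
        params[key.strip()] = parse_value(raw)
    return kind, params


print(parse_cell("bandit:edir=-45:vel=[-80 0]"))
# ('bandit', {'edir': -45.0, 'vel': [-80.0, 0.0]})
```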

This then gets dumped into a CSV file… yes.. into a specific path.

Then from there a constantly-running Python script watches for any mission file changes, detects the change, parses the CSV and throws it into a GDScript file.
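A watcher like that can be very small. Here’s a sketch of the idea, not the actual script: it polls the file’s modification time and, on change, wraps the CSV text in a GDScript constant. The constant name MISSION_CSV, the file names, and the polling interval are all assumptions for illustration.

```python
import time
from pathlib import Path


def csv_to_gdscript(csv_text: str, const_name: str = "MISSION_CSV") -> str:
    """Wrap raw CSV text in a GDScript multi-line string constant."""
    # Escape backslashes and double quotes so the CSV cannot end the string early.
    escaped = csv_text.replace("\\", "\\\\").replace('"', '\\"')
    header = "# Generated from the mission CSV; do not edit by hand.\n"
    return f'{header}const {const_name} = """{escaped}"""\n'


def regenerate_if_changed(csv_path: Path, gd_path: Path, last_mtime: float) -> float:
    """Rewrite the .gd file when the CSV's mtime differs; return the new mtime."""
    mtime = csv_path.stat().st_mtime
    if mtime != last_mtime:
        gd_path.write_text(csv_to_gdscript(csv_path.read_text()))
    return mtime


def watch(csv_path: Path, gd_path: Path, poll_seconds: float = 0.5) -> None:
    """Poll forever; meant to be left running in a terminal during development."""
    last_mtime = 0.0
    while True:
        last_mtime = regenerate_if_changed(csv_path, gd_path, last_mtime)
        time.sleep(poll_seconds)
```

It would be started with something like watch(Path("mission1.csv"), Path("mission1.gd")), where both paths are placeholders.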

Then the game parses the actual CSV data at runtime and turns them into game events.
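The grid-to-events step looks roughly like this, sketched in Python instead of GDScript. Columns map to time in 0.25 s steps as described above; how a row maps to an actual Y coordinate isn’t spelled out, so the row_height parameter (and the SpawnEvent type) are assumptions:

```python
import csv
import io
from typing import List, NamedTuple


class SpawnEvent(NamedTuple):
    time: float   # seconds from mission start
    y: float      # spawn Y coordinate
    spec: str     # raw cell text, e.g. "bandit:edir=-45:vel=[-80 0]"


def grid_to_events(csv_text: str, step_seconds: float = 0.25,
                   row_height: float = 16.0) -> List[SpawnEvent]:
    """Convert a spreadsheet grid into a time-ordered list of spawn events."""
    events: List[SpawnEvent] = []
    for row_index, row in enumerate(csv.reader(io.StringIO(csv_text))):
        for col_index, cell in enumerate(row):
            cell = cell.strip()
            if cell:  # empty cells mean "nothing spawns here"
                events.append(SpawnEvent(col_index * step_seconds,
                                         row_index * row_height,
                                         cell))
    events.sort(key=lambda event: (event.time, event.y))
    return events


sheet = ",bandit:vel=[-80 0],\n,,bandit\n"
for event in grid_to_events(sheet):
    print(event)
```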

As hilariously complex as this spread-editor was, it actually worked and once all the moving parts were in-place I forgot it was there. So I consider it a win 💃

Scope Discipline

Did I already mention I wanted to turn parts of GoVolDot into 3D sequences? yeah, I’m glad I didn’t dive into that hole.

Also, multiplayer support.

Coroutines

In GoVolDot I used coroutines for enemy behavior (I’ve written a post about that), and it simplified things a lot. I re-used that approach as many times as I could! It doesn’t work with all types of behaviors though. I found that if a behavior needs to change based on external events, it’s better to just implement it using a traditional state machine to avoid having to pass around variables for the coroutine to check against.
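To illustrate the trade-off, here’s a small sketch using Python generators as stand-ins for GDScript coroutines. Both enemies (the swooping one and the turret) are invented for this example and the timestep is an assumption; the point is only that a scripted sequence reads top to bottom as a coroutine, while event-driven behavior is clearer as explicit states.

```python
from typing import Iterator, Tuple

DT = 1.0 / 60.0  # assumed fixed timestep


def swoop(x: float, y: float, speed: float = 80.0) -> Iterator[Tuple[float, float]]:
    """Scripted sequence: fly left, dive, fly left again. One yield per frame."""
    for _ in range(30):
        x -= speed * DT
        yield x, y
    for _ in range(30):
        y += speed * DT
        yield x, y
    for _ in range(30):
        x -= speed * DT
        yield x, y


class Turret:
    """Behavior driven by external events: simpler as an explicit state machine."""

    def __init__(self) -> None:
        self.state = "idle"

    def on_event(self, event: str) -> None:
        if self.state == "dead":
            return
        if event == "hit":
            self.state = "dead"
        elif self.state == "idle" and event == "player_near":
            self.state = "firing"
        elif self.state == "firing" and event == "player_far":
            self.state = "idle"


# The game loop just advances the generator once per frame.
path = list(swoop(100.0, 50.0))
print(len(path), "frames, last frame at", path[-1])
```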

Conclusion

I am happy with what I got at the end. This project has been on my mind since the day I had a “real” computer as I used to call x86 PCs when I was 19 years old.

Consider this checkbox.. checked!

And I gotta say, I’m really impressed with the level of depth and attention to detail this old game has! It’s beyond anything else I had on my PC-6001 at the time. And to imagine that the original developers created this game using Z80 assembly, and somehow managed to cram all the required data and all those behaviors into 64KB!

I’ve recently decided to dedicate myself to my passion for GameDev and attempt to make it as a self-employed developer. This was approximately 3 weeks ago.

I will try to write weekly or bi-weekly updates logging what I’ve done so far.

Week 1: Set my heart a flutter

I’ve been prepping this for a while, and one unfinished thing I had to get through was figuring out a suitable pipeline for developing Android apps, for the tooling I want to manage my time and budget.

The last thing I’ve done in this domain is attempt to utilize Python for Android app making, which is possible but what I tried was horrendous in its own way. I’ve looked at both kivy and BeeWare on my Fedora Silverblue laptop.

I immediately ran into issues with kivy that made it a pain to get things running, so I moved on to BeeWare. Now, BeeWare works, but it’s anything but focused and compact. There are lots of moving parts and things to set up, and when you finally get it working there isn’t much documentation. I didn’t feel like I got it even after doing the tutorials they had available, which by the way are incomplete and outdated.

I hate to say it but for the first time, Python failed me. So I decided to move on and focus on modern programming languages that are not derivatives or layers on top of Java like Kotlin is because I dislike Java and its ecosystem. I remember hearing about Flutter somewhere so I jumped there and it’s based on this current gen language called Dart. I liked Dart immediately as it reminded me of TypeScript.

When I started doing the tutorials for Flutter, it just clicked and I got what the high-level design was. You define UI layouts in a similar way to writing a JSON spec. Constructors within constructors, kinda like this:

Widget build(BuildContext context) {

return MaterialApp(

title: 'Hello World',

home: Scaffold(

appBar: AppBar(title: const Text('Hello World')),

body: const Center(

child: Text('Hello World!'),

..etc

It gets a little too LISP-like with the parentheses you need to manage, but nothing a good editor can’t figure out.

With this I was able to put together a simple alternative time app that allows setting up timers through Android’s native timers app:

It’s pretty simple, here it is on GitHub. But it demonstrated to me that this is totally usable and has a low time cost for developing tools like that.

Week 1: Unfinished pixel business

Last year during the holidays, I started remaking my childhood game as a tribute to it. It’s an old forgotten game called VOLGUARD that ran on my first computer, an NEC PC-6001 Mk2 SR produced and localized in Iraq.

As a kid, that game inspired and awed me. It just seemed to be too much for my humble machine and I could never figure out how they managed to squeeze that much stuff into it.

It appears to me that the VOLGUARD version on PC-6001 was the originally developed one. MSX and PC-8801 both got ports with better graphics but they are missing some vital elements like the intro music.

So my goal is to re-make this game maintaining its exact feel and style using Godot. This first week I picked back up what I was doing last year, I’ve had some good progress going and thought perhaps this is going to be a few days only to finish.. estimated it at 6 days of work to completion..

Week 2: 90% == 50%

There is this funny thing in gamedev: if you’re working on a project and you believe you’re at 90% progress with only a few things left to do… you’ve still got 90% more to go.

I re-learned this lesson with VOLGUARD, a game I gave 6 days but which took nearly double that to get anywhere close to completion.

The biggest time sink is the enemy behaviors. Unlike the majority of shovelware games out there, which tend to implement enemies with a single braincell that basically says “see player? run at and attack player”, VOLGUARD has actual, relatively complex behaviors. A ton of them. Out of nearly 28 variants of enemies, there are roughly 20 with unique behaviors!

I developed a good pace in implementing these behaviors and tweaking them to approximate the original game. My last blog post was actually based on that.

There are 5 missions. Each introduces new enemies and more variations in behaviors. This game goes to the school of Souls: you’ll die a lot, but it’s because you made a mistake and you can do better. Make no mistakes and you’ll breeze right through.

VOLGUARD also had this unique design decision where they allowed you to play any of the 5 missions at any time.

At the end of week 2 I had mission 1 fully implemented and tested with all behaviors and enemies, and mission 2 about two-thirds in.

So 6 days in.. progress = 1.5/5.0, not even 50%.
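That progress number can be turned into a crude projection. This sketch assumes every mission costs about the same to implement, which is a simplification (later missions may reuse already-implemented enemies), and the function name is made up:

```python
def project_total_days(days_spent: float, units_done: float, units_total: float) -> float:
    """Linear extrapolation: total time if the remaining units cost the same as the finished ones."""
    return days_spent * units_total / units_done


progress = 1.5 / 5.0
total = project_total_days(days_spent=6, units_done=1.5, units_total=5)

print(f"progress: {progress:.0%}")            # progress: 30%
print(f"projected total: {total:.0f} days")   # projected total: 20 days
```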

Week 3: The Light of Release

Last week’s work focused on the more complex enemies. I’ve had to revise or re-implement several enemies as later on in the game I started running into variations in behaviors I didn’t account for in my initial implementation.

In Week 3, I finished Mission 2 and got most of the way through Mission 3, in addition to implementing all the complex enemies. The remaining enemies are easier, as they are similar to already implemented enemies with variations here and there, making them cost less to implement.

This is when I started seeing some light, that perhaps I could get it released the following week! (this week)